The Zizian Cult Murders and the Edge of Reason.

How the existential threat of AI is driving people to madness.

[UPDATE: Since this piece was filed, Jack LaSota (“Ziz”) was arrested on February 18th in Maryland along with two of her followers who feature in the story below: Michelle Zajko, 32, and Daniel Blank, 26.

They were all dressed in black and trespassing on a property, asking the residents if they could camp there for a month. Officers found two white box trucks parked at the end of the road, with a long rifle in the back of one truck and a handgun on the floorboard. Two of the suspects were wearing gun belts. They have been charged and held for further investigation.]

—

Hands up who’s following this Zizian story? It’s pretty hard to resist.[1] I mentioned in my first post that we were entering an age of cults and madness, and here it is, with bells on: a “murder cult” that is allegedly connected to at least six victims, across three states. Its members are computer scientists, mostly trans and vegan, and their (alleged) leader, Jack Amadeus LaSota, 34, is a trans woman who now goes by “Ziz”. (I’ll be referring to her interchangeably as Ziz and LaSota.)

I’ll summarize what’s happened in a minute, but know that it is unhinged. The violence, the ‘vegan Sith’ philosophy, the use of swords. It’s the center not holding. It’s Yeats’ falcon that cannot hear the falconer, “turning and turning in the widening gyre”. And in a mocking irony, this madness grew out of a group that could scarcely sound more sensible, more tucked in—the Rationalist Community.

There’s been some great reporting already—the investigations on Open Vallejo, and this diagnosis by David Morris, which feels dead on: the existential threat of AI is driving people insane.

I’m going to take a ramble through all of this stuff. Join me.

The Crimes

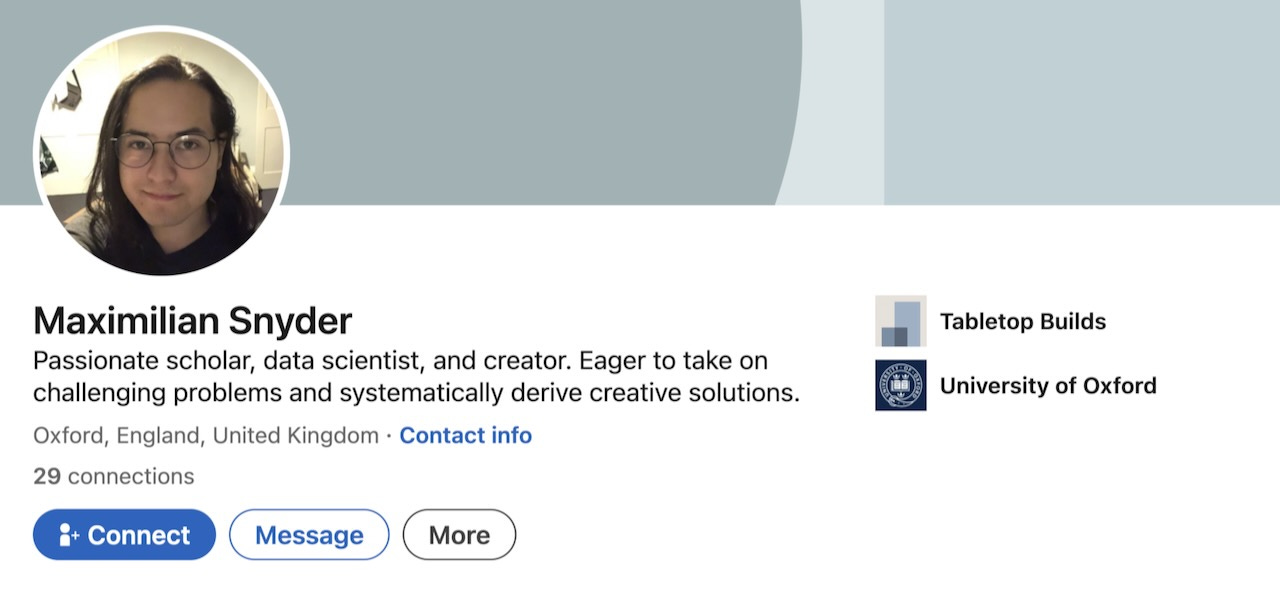

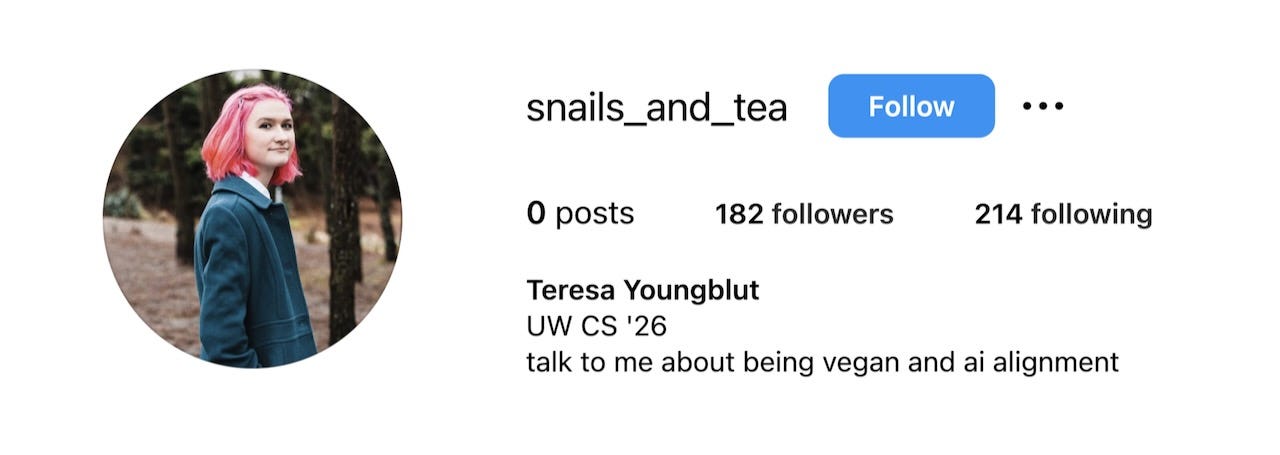

On January 20th in Vermont a couple of Zizians were pulled over by Border Patrol: a pink-haired computer scientist named Teresa Youngblut and a German math wizard named Felix Bauckholt. Out of nowhere, Teresa Youngblut opened fire, killing a Border Patrol agent before her arrest. Felix was also killed in the shootout. Three days earlier, on the opposite coast in Vallejo, Northern California, an 82-year-old man named Curtis Lind was stabbed to death, and another Zizian was arrested: Maximilian Snyder, a 22-year-old data scientist from Oxford University, who had applied for a marriage license with Youngblut last November. His motive seems straightforward. Lind was due to testify against a group of Zizians who had attacked him a couple of years earlier over an alleged rent dispute (they were said to be living in vans parked on his land). Lind fought back, shooting two of his attackers and killing one, but he was blinded in one eye with a samurai sword.

Meanwhile in Pennsylvania, just outside Philly, an elderly couple, the Zajkos, were murdered in January 2023 by—so it’s believed—another Zizian, potentially their own daughter Michelle, who’s apparently connected to the gun that Youngblut used in Vermont. LaSota, Ziz herself, was arrested in a nearby motel shortly afterwards, but soon released on bail. Until she was found that day hiding in the bathroom, it wasn’t clear that she was even alive, since she’d faked her death in the previous year. As of now, she’s at large and wanted in two states.

So, the ‘murder-cult’ branding seems fair enough.

Rationalist Roots

The first alarm bells about Ziz and her crew came in 2019 when they protested an alumni reunion at the Center for Applied Rationality (CFAR) in Sonoma County. Wearing black robes and Anonymous masks, the six characters shown above blocked the entrance to the building and screamed that CFAR was transphobic, that it was covering up sexual misconduct, and that it was betraying the Rationalist movement as a whole. A SWAT team showed up, prepared for a mass shooting, and the Zizians screamed at them too. Jack LaSota (Ziz) is at top left.

They were no strangers to CFAR: they’d attended a bunch of events over the previous four years or so, culminating in the summer fellowship program in 2018. Committed rationalists, in other words. But even then LaSota was making people uncomfortable. CFAR’s head, Anna Salamon, told Open Vallejo that at that fellowship Ziz’s presence made her “physically afraid in a way I’ve never been with anyone else.”

I’m old fashioned. I hear “rationalist” and I think of sanity and sound judgement, that “calm down” motion with the hands. But rationalism has always harbored its share of crazy. It holds its own with religion in that department. Reason, it turns out, has a way of guiding us to its opposite.

The rationalist community that spawned the Zizians emerged in the mid-2000s around the writing of one Eliezer Yudkowsky, aka ‘Yud’, an AI researcher from (where else) Northern California. A bearded, fedora-wearing autodidact, he didn’t graduate high school but nevertheless managed to found the website Less Wrong and the Machine Intelligence Research Institute, and write a series of landmark essays known as The Sequences as well as a fan fiction tome called Harry Potter and the Methods of Rationality. Around this work, a community grew.

At its core, rationalism seeks to improve the world through better decision making and ethics. The thinking goes that mankind is facing a cascade of existential threats (climate change, nuclear war, pandemics and hostile AI), so we had better make the right choices now, or else we’ll be toast. To that end, organizations were founded and conferences launched, full of well-meaning folks who get very excited indeed about decision trees, prisoner’s dilemmas, Bayesian reasoning and game theory. Meanwhile, their cousins, the Effective Altruists (EA), are coming at the same human plight from a more positive perspective, concerned less with preventing future annihilation than with helping mankind flourish in the present. And there’s no question, the EA movement has done a great deal of good in the world. But it has stumbled too. Sam Bankman-Fried was an effective altruist. He embezzled $8 billion.

The AI-pocalypse

Teresa Youngblut’s Instagram bio reads “talk to me about being vegan and AI alignment”. She’s referring to the question of whether AI will be aligned with humanity’s interests or not. Does it care about us or will it kill us? And can we address that now please before the killing starts?

This fear of the AI-pocalypse is a key part of the rationalist character, and the closer we get to that precipice, the more destabilizing the fear becomes. Men of reason start talking about preemptive violence as though it’s our only hope. After all, existential threats justify extreme solutions; the math gets all lopsided. In his book What We Owe The Future, one of the founders of Effective Altruism, Will MacAskill, makes an impassioned case for “longtermism”, in which future people have the same moral value as those alive in the present. Which sounds both noble and true. But dangerous too, because it dramatically favors the future over the present, since there are simply a lot more future people than there are of us. So if a few thousand (or million) have to die today in order to save all of mankind forever…? Do the math.

Yudkowsky is probably the doomiest of AI doomers. In 2023 he wrote a famous column for Time arguing that AI should be shut down or else “everyone will die”, and that we should be prepared to “destroy a rogue datacenter by airstrike.” It also contains this extraordinary vision of how AI might leap from computers into alien lifeforms. A movie I would definitely watch.

“To visualize a hostile superhuman AI, don’t imagine a lifeless book-smart thinker dwelling inside the internet and sending ill-intentioned emails. Visualize an entire alien civilization, thinking at millions of times human speeds… in a world of creatures that are, from its perspective, very stupid and very slow. A sufficiently intelligent AI won’t stay confined to computers for long. In today’s world you can email DNA strings to laboratories that will produce proteins on demand, allowing an AI initially confined to the internet to build artificial life forms or bootstrap straight to postbiological molecular manufacturing.”

I’d like to call this stuff nuts and just move on. I’ve got work to do and it’s hard to focus when the aliens are coming up the drive. But Yudkowsky is hard to dismiss. He’s the founding prophet of rationalism, he’s very clever indeed, and he’s thought as hard as anyone about AI over the past two decades. His alarm is shared in spirit by many of AI’s leading lights, not to mention The Elders (a brain trust of world leaders), and most of the world’s governments.

That said, there has been pushback against his pessimism among his fellow rationalists, who point out that Yud’s forecasts have been wrong in the past. And speaking personally, the disdain that he assumes AI will have for us as “very stupid and very slow” feels awfully familiar. It’s the vibe I get from Geek Squad when I can’t reset my Firestick. Call me paranoid, but I think nerds have always looked down on the less tech savvy among us. So maybe Yudkowsky is projecting. Maybe he’s telling on himself.

What Zizians Believe

The Zizians are Doomers too, of course. Though they’ve broken away from the rationalist community, they still cleave to many of the views. For instance, Functional Decision Theory (FDT), which Yudkowsky published in 2017—apparently it’s Ziz’s favorite flavor of decision theory. Which might be a great choice, I can’t tell, because honestly, I don’t understand it, it’s beyond me—AI won’t break a sweat outpacing this human intelligence (in fact I probably passed my personal Singularity years ago and didn’t notice). But from what I can gather there’s no mention of violence in FDT. If anything it recommends being cooperative rather than greedy. So how did it result in a string of murders?

Fortunately, there’s a wealth of material online that might shed light on how these rationalist ideas devolved for Ziz and her crew. The rationalists have been nothing but open and collaborative since this story broke. Members have created a public Google Drive full of documents and records, and they’ve dug up Ziz’s old blog posts, all without a hint of defensiveness or paranoia about “the media”. Other subcultures take note!

I particularly recommend a couple of pieces by Apollo Mojave and by Sefa Shapiro, both of whom posted after the CFAR protest in 2019, specifically to warn the community about Ziz. They paint a picture of an idealist turned extremist, clearly unstable, with violent fantasies. A radical vegan who supports a Nuremberg-style trial for meat eaters and who practices classic cult techniques like separating people from their families, and wrapping her ideas in their own zany terminology.

I won’t get into the full belief system here, there’s too much of it, and it’s frankly a bit deranged. But a couple of aspects of it stood out, because of the way they chime with the prevailing culture. That’s why the Zizians are so fascinating. They’re like a fairground mirror.

Fight Bitterly, Never Surrender

For example, the Zizian interpretation of Functional Decision Theory is—according to Mojave—to never surrender no matter the conflict. Instead, one should “fight bitterly… [make] it too costly to fight you.” Which may explain why Youngblut opened fire on the Border Patrol agent allegedly without provocation. This tendency to opt immediately for violence is reminiscent of Luigi Mangione, who according to David Morris was probably a techno-rationalist himself. It’s as though there’s a strain of Gen Z that’s simply done talking. Ironic that the reason crowd has found reasons to give up on using reason altogether.

Hacking the Hemispheres

Another resonant theory of LaSota’s is that human beings comprise two distinct hemispheres, left and right, each of which has its own identity and gender, and deserves its own name, which is perfect for the Zizian membership profile of (almost all) trans women. She also recommended practicing “unihemispheric sleep”, in which members attempted to rest one hemisphere at a time. It can’t be done, but still they tried, keeping one eye open and distracted while the other side “slept”, resulting in several sleep-deprived cult members who were vulnerable to mind control.

This practice of training our brains and bodies in very direct and mechanistic ways is all the rage. Yuval Noah Harari, the “Sapiens” expert, has described human beings as “hackable animals”, a way of thinking that underpins much of the Andrew Huberman-style optimization culture that evolved in the same Bay Area petri dish as AI and rationalism. James Clear recommends that we announce out loud what we intend to do as a way of programming our neuroplastic hardware with commands, like Siri. And let’s not forget transhumanism, the infiltration of technology into our bodies, brain chips and what have you. As we increasingly come to resemble robots, AI is trying to resemble us, each one converging on the other, man and machine. (The incredible progress in robotic dancing feels relevant here.)

The Warping Terror of Existential Threat

Above all, however, the Zizians represent fear. The warping terror of existential threat. We’ve all felt it in flashes, like looking into the sun. You have to turn away and carry on. Make dinner. Walk the dog. But the rationalists kept staring, and it may have blinded some of them to simpler truths like ‘don’t embezzle’ and ‘don’t murder’ etc.

According to the philosopher Timothy Morton,[2] the danger of AI is a “Hyperobject”, like climate change or the internet: too vast and complex to even think about, let alone respond to in a cogent manner. We have the brains of hunter-gatherers and foragers in roving tribes; we’re just not equipped to contemplate species extinction. No wonder the threat of AI is producing casualties. The Zizians will not be the last group to lose the plot.

For rationalists, part of the anxiety may stem from AI’s proximity to God. If you seek truth through reason alone—not revelation or poetry or any consideration of the human soul—and if everything in your universe is calculable so long as you have the data and processing power, then surely AI becomes your God, your hyper-intelligent Oracle? Could this be why some rationalists are losing their minds? The Singularity is their Second Coming, only in this version, God might well be vengeful. He might banish us all to Hell.

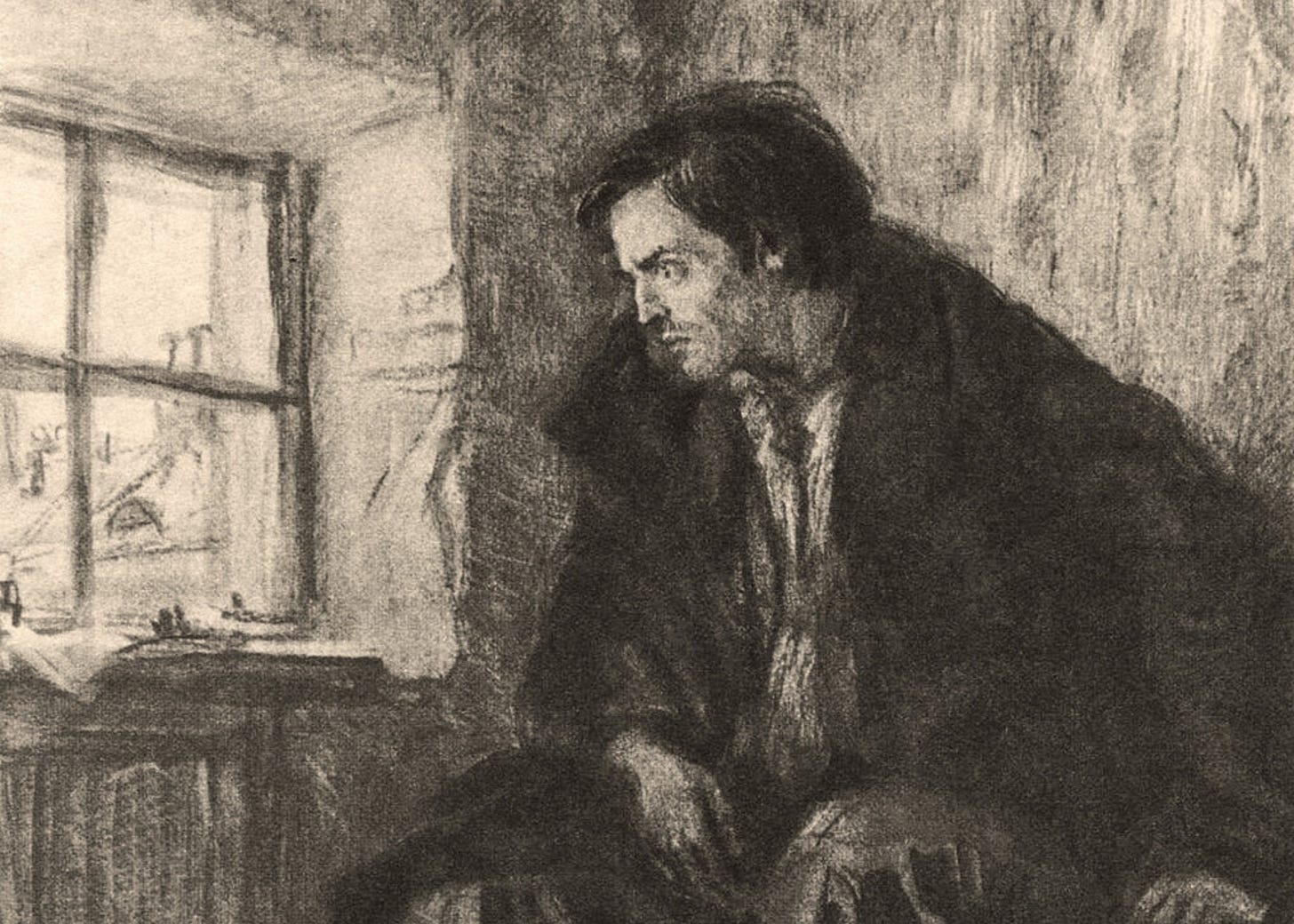

Remember Raskolnikov

I’m reminded of Raskolnikov in Crime and Punishment. Because it was reason that led him to murder too. He knew that the old lady Alyona was an evil pawnbroker who exploited the poor and mistreated her sister. By killing her he’d be improving the world. He had it all figured out. He did the math.

But reason failed him. Firstly, his best laid plans went awry—he had to kill Alyona’s sister too, as a witness. Then he was tortured by his conscience, a piece of the moral calculus that rationalists scarcely ever factor in. Raskolnikov’s guilt led him to realize that it wasn’t really altruism that drove him, but ego, and that his ego was itself fueled by reason—he was so pleased with his clever arguments that he became convinced that he had the right to decide the future for himself and for others too.

The Zizian murders are now with the FBI. No doubt there will be a media stampede for interviews. And I’m here for it, there’s so much to uncover.

But as I write this, I’m wondering in particular what their consciences are telling them now. Are they realizing, like Raskolnikov, that reason has failed them, and might be failing us all? That it wasn’t high-minded decision theory that made them kill, but fear and ego, the kind of primal drives that precede our rationality, as David Hume wrote: “Reason is, and ought only to be the slave of the passions”?

As AI forces us to confront what it is to be human, and also to be inhuman, I wonder whether the lessons that the Zizians will take from this are lessons that Silicon Valley would do well to heed.

[1] Hat tip to Jared Mazzaschi for mailing me the minute the story broke.

[2] Hyperobjects: Philosophy and Ecology After the End of the World, by Timothy Morton.

What an absolutely wild ride.

Talk about fun! These folks are really off their rockers and if they didn't kill innocent victims it sure would be fun to watch. Oh well, can't win them all I guess. Thanks for the breakdown regardless.

Your summary paragraphs about the "warping terror of existential threat" were particularly sharp. I don't think I've come across that idea put so succinctly before. Bravo. I think you are onto something. Plus a little Raskolnikov never hurts an argument. Ever.

Thanks for the name check!